Well, conditions have changed recently, so it's time for an update on this.

HTML 5 video tag

The HTML 5 draft supports a `<video>` tag for embedding video into a web page as a native HTML element (i.e. without a plugin). Earlier versions of the draft even recommended that browsers support Ogg/Theora as a video format. That recommendation was later removed, which started a lot of discussion; this Wikipedia article summarizes the issue. Nevertheless, a number of browsers support Ogg/Theora video out of the box, among them Firefox 3.5.

Cortado plugin

In a separate development, the Cortado Java applet for Theora playback was written. It is GPL-licensed, so you can simply download it and put it into your webspace.

Now the cool thing about the `<video>` tag is that browsers which don't know about it will display the content between `<video>` and `</video>`, so you can put the applet code there. While researching the best way to do this, I read that the (often recommended) `<applet>` element is not valid HTML. A better solution is here.

Webmaster's side

Now if you have the live-stream at

http://192.168.2.2:8000/stream.ogg your html page will look like:<html>

<head>

<meta http-equiv="content-type" content="text/html; charset=ISO-8859-1">

</head>

<body>

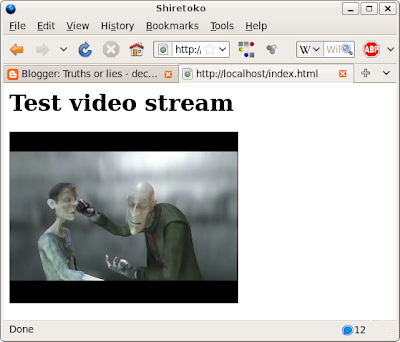

<h1>Test video stream</h1>

<video tabindex="0"

src="http://192.168.2.2:8000/stream.ogg"

controls="controls"

alt="Test video stream"

title="Test video stream"

width="320"

height="240"

autoplay="true"

loop="nolooping">

<object type="application/x-java-applet"

width="320" height="240">

<param name="archive" value="cortado.jar">

<param name="code" value="com.fluendo.player.Cortado.class">

<param name="url" value="http://192.168.2.2:8000/stream.ogg">

<param name="autoplay" value="true">

<a href="http://192.168.2.2:8000/stream.ogg">Test video stream</a>

</object>

</video>

</body>

</html>

Note that for live streams the `autoplay` attribute should be given. Without it, Firefox will load the first frame anyway (to show it in the video widget) and then stop downloading the live stream until you click start. Obviously, this messes up live streaming.

Server side

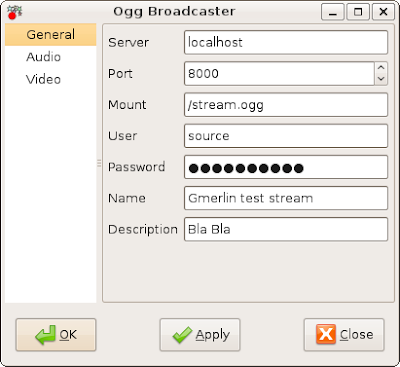

For live streaming I simply installed the Icecast server that came with my Ubuntu. I changed the passwords in `/etc/icecast2/icecast.xml`, enabled the server in `/etc/default/icecast2` and started it with `/etc/init.d/icecast2 start`.

Upstream side

There are lots of programs for streaming to Icecast servers; most of them use libshout. I decided to create a new family of plugins for gmerlin: broadcasting plugins. They have the same API as the encoder plugins (used by the transcoder or the recorder). The only differences are that they don't produce regular files and that they must be realtime capable.

Using libshout is extremely simple:

- Create a shout instance with `shout_new()`.
- Set parameters with the `shout_set_*()` functions.
- Call `shout_open()` to actually open the connection to the Icecast server.
- Write a valid Ogg/Theora stream with `shout_send()`. Actually, I took my already existing Ogg/Theora encoder plugin and replaced all `fwrite()` calls by `shout_send()`.

Of course, I left out some minor details in this overview; see the libshout documentation for them. As the upstream client, I use my new recorder application.
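To make the steps above a bit more concrete, here is a minimal C sketch of the "replace `fwrite()` by `shout_send()`" idea, assuming libshout 2.x (`<shout/shout.h>`). The encoder writes every Ogg page through a small output callback, so switching from a file to an Icecast server only means swapping the callback. The names `ogg_sink_t`, `file_write`, `shout_write`, `open_broadcast` and `write_ogg_page` are hypothetical and for illustration only; they are not gmerlin's actual plugin API. The libshout part is guarded by `HAVE_LIBSHOUT` so the file path also builds without the library.

```c
#include <stddef.h>
#include <stdio.h>

#ifdef HAVE_LIBSHOUT
#include <shout/shout.h>
#endif

/* Hypothetical output abstraction: the encoder writes every Ogg page
 * through sink->write(), so it never needs to know where the bytes go. */
typedef struct {
    int (*write)(void *priv, const unsigned char *data, size_t len);
    void *priv;
} ogg_sink_t;

/* File output: the plain fwrite() path of a regular encoder plugin. */
static int file_write(void *priv, const unsigned char *data, size_t len)
{
    return fwrite(data, 1, len, (FILE *)priv) == len ? 0 : -1;
}

#ifdef HAVE_LIBSHOUT
/* Broadcast output: same signature, but the bytes go to Icecast. */
static int shout_write(void *priv, const unsigned char *data, size_t len)
{
    return shout_send((shout_t *)priv, data, len) == SHOUTERR_SUCCESS ? 0 : -1;
}

/* Steps 1-3 of the list: create, configure and open the connection.
 * shout_init() should really be called only once per process. */
static shout_t *open_broadcast(const char *host, unsigned short port,
                               const char *password, const char *mount)
{
    shout_t *shout;

    shout_init();
    if ((shout = shout_new()) == NULL)
        return NULL;
    if (shout_set_host(shout, host) != SHOUTERR_SUCCESS ||
        shout_set_port(shout, port) != SHOUTERR_SUCCESS ||
        shout_set_password(shout, password) != SHOUTERR_SUCCESS ||
        shout_set_mount(shout, mount) != SHOUTERR_SUCCESS ||
        shout_set_protocol(shout, SHOUT_PROTOCOL_HTTP) != SHOUTERR_SUCCESS ||
        shout_set_format(shout, SHOUT_FORMAT_OGG) != SHOUTERR_SUCCESS ||
        shout_open(shout) != SHOUTERR_SUCCESS) {
        fprintf(stderr, "libshout: %s\n", shout_get_error(shout));
        shout_free(shout);
        return NULL;
    }
    return shout;
}
#endif

/* Stand-in for an encoder's page output: an Ogg page consists of a
 * header and a body, each written with one call to the sink. */
static int write_ogg_page(const ogg_sink_t *sink,
                          const unsigned char *header, size_t header_len,
                          const unsigned char *body, size_t body_len)
{
    if (sink->write(sink->priv, header, header_len) != 0)
        return -1;
    return sink->write(sink->priv, body, body_len);
}
```

With libshout installed, you would compile with `-DHAVE_LIBSHOUT`, link with `-lshout` and build the sink as `ogg_sink_t sink = { shout_write, open_broadcast("localhost", 8000, "hackme", "/stream.ogg") };`, where host, password and mount point are placeholders for your own Icecast setup. When sending a prerecorded file instead of a live capture, libshout's `shout_sync()` can be called after each send to pace the data to real time.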

The result

Below is a screenshot of Firefox playing back a live stream:

Open Issues

The live-video experiment went extremely smoothly. However, I discovered some minor issues, which could be optimized away:

- Firefox doesn't recognize live streams (i.e. streams with infinite duration) properly. It displays a seek slider which always sticks at the very end. Detecting an HTTP stream as live can easily be done by checking the `Content-Length` field of the HTTP response header, which a live stream doesn't have.
- The Theora encoder (1.1.1 in my case) might be faster than the 1.0.0 series, but it's still way too slow. Live encoding of a 320x240 stream is possible on my machine, but 640x480 isn't.

- The Cortado plugin has a loudspeaker icon, but no volume control (or it just doesn't work with my Java installation).

- With the video switched off, I can send audio streams to my WLAN radio. This makes my older solution (based on ices2) obsolete.

- Of course, the whole thing works with prerecorded files as well. In this case, you can just put the files into your webspace and your normal web server will deliver them. No Icecast needed.
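The `Content-Length` check mentioned in the first item could look roughly like this in C. It is only a sketch under assumptions: `http_stream_is_live()` and `header_has_name()` are hypothetical helpers that take the raw header block of an HTTP response, and they implement nothing more than the heuristic described above. A browser would of course do this inside its own networking code.

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Case-insensitive check whether a header line starts with `name`
 * (HTTP header field names are case-insensitive). */
static int header_has_name(const char *line, const char *name)
{
    for (; *name; line++, name++) {
        if (tolower((unsigned char)*line) != tolower((unsigned char)*name))
            return 0;
    }
    return 1;
}

/* Heuristic: a response without a Content-Length field has no known
 * size, so a player could treat it as a live stream and hide the seek
 * slider. Returns 1 for "live", 0 if a Content-Length field is present. */
static int http_stream_is_live(const char *headers)
{
    const char *line = headers;

    while (line != NULL && *line != '\0') {
        /* An empty line ends the header block. */
        if (*line == '\r' || *line == '\n')
            break;
        if (header_has_name(line, "Content-Length:"))
            return 0;
        line = strchr(line, '\n');
        if (line != NULL)
            line++;
    }
    return 1;
}
```

Note that an HTTP/1.1 server may also omit `Content-Length` when it uses chunked transfer encoding, so a real implementation would probably check `Transfer-Encoding` as well before concluding that a stream is live.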