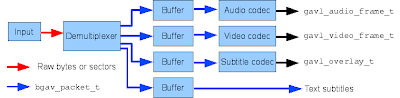

Input

The input module obtains the data. Examples of data sources are regular files, DVDs, and DVB or network streams. Usually the data is delivered as raw bytes. For DVDs and VCDs, however, read and seek operations are sector-based. Since both formats require each sector to start with a sync point (an MPEG pack header), passing sector-based data to the demultiplexer speeds up several things.

Demultiplexer

This is where the compressed frames are extracted from the container. During initialization, the demultiplexer creates the track table (bgav_track_table_t). This contains the tracks (in most cases just one). Each track contains the streams for audio, video and subtitles. For most containers the demultiplexer already knows the audio and video formats. For others, the codec must detect them. This means you should never trust the formats before you have called bgav_start().

In some cases (DVD, VCD, DVB) the input already knows the complete track layout and which demultiplexer to use. The initialization of the demultiplexer can then skip the stream detection. In the general case the demultiplexer is selected according to the file content (i.e. the first few bytes). Some formats (MPEG, mp3) can have garbage before the first detection pattern, so we must repeatedly skip bytes before checking for one of these.
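
Skipping leading garbage before the first detection pattern can be sketched as follows. This is a minimal illustration in Python, not the library's actual probing code: the function name find_sync and the limit MAX_SKIP_BYTES are assumptions made for the example. The only format fact used is that an MPEG program stream pack header starts with the bytes 00 00 01 BA.

```python
from typing import Optional

# Start code of an MPEG program stream pack header.
MPEG_PACK_START = b"\x00\x00\x01\xba"

# Hypothetical limit on how much leading garbage the probe tolerates.
MAX_SKIP_BYTES = 64 * 1024


def find_sync(data: bytes, pattern: bytes = MPEG_PACK_START,
              max_skip: int = MAX_SKIP_BYTES) -> Optional[int]:
    """Return the offset of the first sync pattern, or None if no pattern
    starts within the first max_skip bytes."""
    # The search window ends where a pattern starting at max_skip would end.
    pos = data.find(pattern, 0, max_skip + len(pattern))
    return pos if pos >= 0 else None
```

A caller would hand the first chunk of the input to find_sync() and, if an offset is returned, start demultiplexing from there. Bounding the skip keeps the probe from scanning an entire file of an unrelated format.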

The demultiplexer has a routine which reads the next packet from the input. Depending on the format, this involves decoding a packet header and extracting the compressed data, which the codec can handle later on. Some formats (rm, asf, MPEG-2 transport streams, Ogg) use 2-layer multiplexing: top-level packets contain subpackets.

If the format is well designed, we also know the timestamp and duration of the packet and whether the packet contains a keyframe. Not all formats are well designed though (and some encoders are buggy), so in those cases we must do a lot of black magic to get as much information as possible.

Most demultiplexers are native implementations. As a fallback for very obscure formats we also support demultiplexing with libavformat.

Buffers

Gmerlin-avdecoder is strictly pull-based. If a video codec requests a packet, but the next packet in the stream belongs to an audio stream, that packet must be stored for later use. For this, we have buffers, which are just linked lists of packets. They can grow dynamically. This approach makes the decoding process mostly insensitive to badly interleaved streams.
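
The pull-based buffering can be pictured with a toy model. The class StreamingDemuxer, its get_packet() method and the (stream name, payload) packet layout are hypothetical and only serve this sketch; the real packet buffers are C data structures inside the library.

```python
from collections import deque
from typing import Deque, Dict, Iterable, Optional, Tuple

Packet = Tuple[str, bytes]  # (stream name, compressed payload), hypothetical


class StreamingDemuxer:
    """Toy model: reads packets strictly sequentially and buffers the ones
    that belong to a stream nobody has asked for yet."""

    def __init__(self, source: Iterable[Packet], used_streams: Iterable[str]):
        self._source = iter(source)
        # One dynamically growing FIFO per stream that is in use.
        self._buffers: Dict[str, Deque[bytes]] = {
            name: deque() for name in used_streams
        }

    def get_packet(self, stream: str) -> Optional[bytes]:
        buf = self._buffers[stream]
        while not buf:
            nxt = next(self._source, None)
            if nxt is None:
                return None  # end of input reached
            name, payload = nxt
            if name in self._buffers:
                self._buffers[name].append(payload)
            # Packets of unused streams are simply dropped.
        return buf.popleft()
```

Requesting a video packet from a badly interleaved source just makes the audio buffer grow until the first video packet shows up; the audio packets are then served from the buffer without touching the input again.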

Interleaved vs. noninterleaved

The demultiplexing method described above reads the file strictly sequentially. This has the advantage that we never seek in the stream so we can do this for non-seekable sources.

Some files (more than you might think) are however completely non-interleaved, e.g. the audio packets follow all video packets. These always have a global index though. In this case, if a video packet is requested, the demultiplexer seeks to the packet start and reads the packet. This mode, which is also used in sample accurate mode, only works for seekable sources.
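
For comparison, the index-driven mode might look like the sketch below. The index layout (a list of byte offset and size pairs per stream) and the class name IndexedDemuxer are assumptions for illustration; real containers store more per entry.

```python
import io
from typing import BinaryIO, Dict, List, Optional, Tuple

# Hypothetical global index: per stream, a list of (byte offset, size).
Index = Dict[str, List[Tuple[int, int]]]


class IndexedDemuxer:
    """Toy model: serves each stream by seeking to its next index entry."""

    def __init__(self, f: BinaryIO, index: Index):
        self._f = f
        self._index = index
        self._next = {name: 0 for name in index}  # next entry per stream

    def get_packet(self, stream: str) -> Optional[bytes]:
        i = self._next[stream]
        entries = self._index[stream]
        if i >= len(entries):
            return None  # EOF for this stream only
        offset, size = entries[i]
        self._f.seek(offset)  # requires a seekable source
        self._next[stream] = i + 1
        return self._f.read(size)


# Usage: a file in which all video packets precede all audio packets.
demo_file = io.BytesIO(b"V0V1V2A0A1")
demo_index = {"video": [(0, 2), (2, 2), (4, 2)], "audio": [(6, 2), (8, 2)]}
demux = IndexedDemuxer(demo_file, demo_index)
first_audio = demux.get_packet("audio")  # seeks past the video data
first_video = demux.get_packet("video")  # seeks back to the file start
```

No packet buffers are needed here, because every request is answered by a seek and a read for exactly the stream that asked.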

Codecs

These convert packets to A/V frames. In most cases, one packet equals one frame. In some cases (mostly for MPEG streams), the codec must first assemble frames from packets or split packets containing multiple frames. The codec outputs the gavl frames, which are handled by the application.

Codecs are selected according to fourccs. For formats which don't have fourccs, we either invent them or use fourccs from AVI or Quicktime.
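
A fourcc-based lookup can be sketched like this. The packing order (first character in the most significant byte), the registry contents and the decoder names are assumptions for the example and are not taken from the library.

```python
from typing import Optional


def fourcc(tag: str) -> int:
    """Pack four ASCII characters into one 32-bit integer, with the first
    character in the most significant byte (an assumed byte order)."""
    if len(tag) != 4:
        raise ValueError("a fourcc has exactly four characters")
    return int.from_bytes(tag.encode("ascii"), "big")


# Hypothetical registry mapping fourccs to decoder names.
CODECS = {
    fourcc("avc1"): "h264-decoder",    # Quicktime/MP4 tag for H.264
    fourcc("MJPG"): "mjpeg-decoder",   # AVI tag for Motion JPEG
    fourcc("xyz0"): "example-decoder", # made-up tag for a format without one
}


def find_codec(tag: int) -> Optional[str]:
    """Return the decoder registered for a fourcc, or None if unsupported."""
    return CODECS.get(tag)
```

Representing the tag as an integer makes comparison and table lookup cheap, which is why an invented fourcc is a convenient way to fit tagless formats into the same mechanism.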

Video codecs must take care of timestamps. For MPEG streams the timestamps at multiplex level (with a 90 kHz clock) must be converted to timestamps in the timescale of the video framerate. Audio codecs must do buffering because the application can decide how many samples to read at once.
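
The timestamp conversion is plain rescaling between two clocks. A minimal sketch, assuming integer timestamps and rounding to the nearest tick (the helper name rescale is made up for this example):

```python
MPEG_CLOCK = 90000  # ticks per second at the multiplex level


def rescale(pts: int, src_scale: int, dst_scale: int) -> int:
    """Convert a non-negative timestamp from src_scale to dst_scale ticks
    per second, rounding to the nearest tick."""
    return (pts * dst_scale + src_scale // 2) // src_scale


# NTSC video: timescale 30000, frame duration 1001.
# One frame lasts 3003 ticks of the 90 kHz clock.
second_frame_pts = rescale(3003, MPEG_CLOCK, 30000)  # 1001
```

Integer arithmetic avoids the drift that accumulates when timestamps are passed through floating point seconds.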

Text subtitles

There are no codecs for text subtitles. Each packet contains the string (converted to UTF-8 by the demultiplexer), the presentation timestamp and the duration.

Reading a Video frame

If an application calls bgav_read_video(), the following happens:

- The core calls the decode function of the codec

- The codec checks if there is already a decoded frame available. This is the case after initialization because some codecs need to decode the first picture to detect the video format

- If no frame is left, the codec decodes one. For this it will most likely need a new packet

- The codec requests a packet. Either the packet buffer already has one, or the demultiplexer must get one

- In streaming mode, the demultiplexer gets the next packet from the input and puts it into the packet buffer of the stream to which it belongs. If the stream is not used, no packet is produced and its data is skipped. This is repeated until the end is reached or a packet for the video stream is found. If the end is reached, the demultiplexer signals EOF for the whole track.

- In non-streaming mode the demultiplexer knows which stream requested the packet. It seeks to the position of the next packet and reads it. EOF is signaled per stream.
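
The steps above can be condensed into a toy model of the codec side. ToyVideoCodec and read_video() are hypothetical stand-ins, not the library's API, and "decoding" is reduced to wrapping the packet contents in a string.

```python
from collections import deque


class ToyVideoCodec:
    """Hypothetical codec in which one packet yields one frame."""

    def __init__(self, get_packet):
        self._get_packet = get_packet  # callback into the demultiplexer
        # Decode the first picture right away, as a codec would do to
        # detect the video format, and keep it for the first read.
        self._pending = self._decode_next()

    def _decode_next(self):
        packet = self._get_packet()
        if packet is None:
            return None  # the demultiplexer signaled EOF
        return "frame(" + packet.decode("ascii") + ")"  # stand-in decoding

    def decode(self):
        # Hand out an already decoded frame first, if there is one.
        if self._pending is not None:
            frame, self._pending = self._pending, None
            return frame
        return self._decode_next()


def read_video(codec):
    """Stand-in for the core's entry point: it just calls the codec."""
    return codec.decode()


# Usage: a packet source with two packets.
packets = deque([b"p0", b"p1"])
codec = ToyVideoCodec(lambda: packets.popleft() if packets else None)
```

After construction one packet has already been consumed, so the first read_video() call returns the cached frame without requesting anything from the demultiplexer.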

Building file indices for sample accurate access can happen in different ways depending on the container format. In the end, we need byte positions in the file, the associated timestamps (in output timescale) and keyframe flags. The following modes are supported:

- MPEG mode: The codec must build the index. This involves parsing the frames (only needed parts) to extract timing information with sample accuracy. Codecs supporting this mode are libmpeg2, libavcodec, libmad, liba52 and faad2.

- Simple mode: The demultiplexer knows about the output timescale, gets precise timestamps and one packet equals one frame. Then no codec is needed for building the index.

- Mix of the above. E.g. in flv, timestamps are always in milliseconds. This is precise for video streams. For audio streams (mostly mp3), we need MPEG mode.
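
For simple mode, the index and a seek on it can be sketched as follows. The entry layout mirrors the three items named above (byte position, timestamp in output timescale, keyframe flag); the names IndexEntry, build_simple_index and seek_entry are assumptions, and the sketch further assumes that timestamps are stored in ascending order.

```python
import bisect
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass
class IndexEntry:
    position: int   # byte position in the file
    pts: int        # timestamp in output timescale
    keyframe: bool  # True if decoding can start here


def build_simple_index(
        packets: Iterable[Tuple[int, int, bool]]) -> List[IndexEntry]:
    """Simple mode: one packet equals one frame, so the demultiplexer's
    packet information is copied into the index as it is."""
    return [IndexEntry(pos, pts, key) for pos, pts, key in packets]


def seek_entry(index: List[IndexEntry], target_pts: int) -> Optional[int]:
    """Return the number of the last keyframe entry at or before
    target_pts, or None if there is none. Assumes ascending pts."""
    times = [entry.pts for entry in index]
    i = bisect.bisect_right(times, target_pts) - 1
    while i >= 0 and not index[i].keyframe:
        i -= 1
    return i if i >= 0 else None
```

For sample accurate access, a decoder would start at the returned keyframe and decode forward until it reaches the frame with the requested timestamp.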
