Downscaling images is a commonly needed operation, e.g. if HD videos or photos from megapixel cameras are converterted for burning on DVD or uploading to the web. Mathematically, image scaling is exactly the same as audio samplerate conversion (only in 2D, but it can be decomposed into two subsequent 1D operations). All these have in common that samples of the destination must be calculated from the source samples, which are given on a (1D- or 2D-) grid with a different spacing (temporally or spatially). The reciprocal of the grid spacings are the sample frequencies.

In the general case (i.e. if the resample ratio is arbitrary) one will end up with interpolating the destination samples from the source samples. The interpolation method (nearest neighbor, linear, cubic...) can be configured in better applications.

One might think we are done here, but unfortunately we aren't. Interpolation is the second step of downscaling. Before that, we must make sure that the sampling theorem is not violated. The sampling theorem requires the original signal to be band-limited with a cutoff frequency of half the sample frequency. This cutoff frequency is also called the Nyquist frequency.

So if we upscale an image (or resample an audio signal to a higher sample frequency), we can assume that the original was already band-limited with half the lower lower sample frequency so we have no problem. If we downsample, we must first apply a digital low-pass filter to the image. Low-pass filtering of images is the same as blurring.

The imagemagick solution

What pointed me to this subject in the first place was this post in the gmerlin-help forum (I knew about sampling theory before, I simply forgot about it when programming the scaler). The suggestion, which is implemented in ImageMagick, was to simply widen the filter kernel by the inverse scaling ratio. For linear downscaling to 1/2 size this would mean to do an interpolation involving 4 source pixels instead of 2. The implementation extremely simple, it's just one additional variable for calculating the number and values of filter coefficients. This blurs the image because it does some kind of averaging involving more source pixels. Also the amount of blurring is proportional to the inverse scale factor, which is correct. The results actually look ok.

The gavl solution

I thought what's the correct way to do this. As explained above, we must blur the image with a defined cutoff frequency and then interpolate it. For the blur filter, I decided to use a Gaussian low-pass because it seems the best suited for this.

The naive implementation is to blur the source image into a temporary video frame and then interpolate into the destination frame. It can however be done much faster and without temporary frame, because the 2 operations are both convolutions. And the convolution has the nice property, that it's associative. This means, that we can convolve the blur coefficients with the interpolation coefficients resulting in the filter coefficients for the combined operation. These are then used on the images.

The difference

The 2 solutions have a lot in common. Both run in 1 pass and blur the image according to the inverse scaling ratio. The difference is, that the imagemagick method simply widens the filter kernel by a factor while gavl widens the filter by colvolving with a low-pass.

Examples

During my research I found this page. I downloaded the sample image (1000x1000) and downscaled it to 200x200 with different methods.

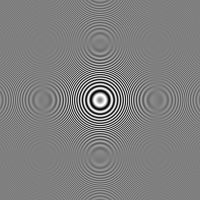

First the scary ones:

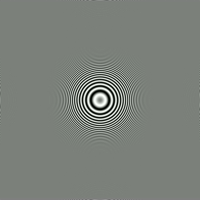

Now it gets better:

Gimp (linear, grayscale mode)

Gimp (linear, grayscale mode)

mplayer -vf scale=200:200 scale.movThe movie was made with qtrechunk from the png file.

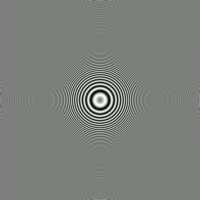

gavl with imagemagick method (

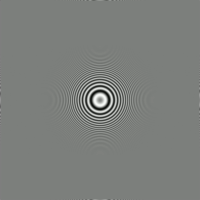

gavl with imagemagick method (GAVL_DOWNSCALE_FILTER_WIDE) gavl with gaussian preblur (

gavl with gaussian preblur (GAVL_DOWNSCALE_FILTER_GAUSS)

3 comments:

Fun, I love being able to see pictures :-D

One thing worthy of note: you do not seem to be doing any gamma correction! All your images end up as gamma 1.0, while my (and most people's) monitors expect gamma 2.2. This is why in all images (especially the last image), you get this light-grey center, and the solid color around the box appears darker. Now, if you color-correct the images from linear to 2.2, they look much better in terms of blending together - both the center and the solid color look the same.

Also, you can specify a gamma 1.0 in the png image itself so the browser will know that it needs to do color correction, but not all browsers support that, as far as I know.

Gamma correction is not related to scaling methods. The algorithms however assume, that there is a linear relationship between the color channels and the intensities. Otherwise, the interpolation cannot be done in a simple way. Now one can discuss if scaling has to be done before or after gamma correction, but that would be another project.

Post a Comment